An interesting taxonomy of some of the common turf wars in corporate IT departments. Clearly, we are not a socially deft people.

Definition Monday: Multifactor Authentication

February 14, 2011

Welcome to Definition Monday, where we define and explain a common technology or security concept for the benefit of our less experienced readers. This week: Multifactor Authentication.

Authentication is a key security concept in today’s networked environments, but it’s one that is commonly both misunderstood and underappreciated.

For a long time now, the most common type of authentication on computer systems has been the password or passphrase (these terms are essentially interchangeable, though “passphrase” generally refers to a longer string of characters). Examples abound – logging into your email account, logging into your workstation, even logging into this blog to leave a comment; in each of these cases, you need to enter a username and a passphrase to verify your identity. The thought is that the username of your account might be common knowledge, but the passphrase should be a secret that is known only to the appropriate user, and so knowledge of the passphrase is de facto proof that the user requesting access is indeed the user who was issued the account.

(Tangential comment: As I often say when giving basic security lectures: passwords are not just a cruel joke perpetrated by the IT staff on unsuspecting users to make their lives more difficult. They are a means of authentication, a means of proving that you are indeed the legitimate owner of an account. The authentication leads to authorization, the assignment of proper access rights and controls to your login session, as well as accounting, the recording of your successful authentication and any particularly interesting things you do while logged in. Collectively, these are known as the AAA services and are provided by protocols like RADIUS.)

These days, though, passphrases are no longer adequately secure for some environments. They can be compromised through brute force attacks, if poorly chosen. They can be harvested from plaintext database records if a web site is poorly engineered. They can be entered by users into a phishing web site, or on a computer running a keylogging daemon. Knowledge of a passphrase is no longer an ironclad proof of identity; we need something more.
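To make the plaintext-database problem concrete, here is a minimal sketch of the usual alternative: storing a salted, deliberately slow hash instead of the passphrase itself. It uses only the Python standard library; the function names and the iteration count are my own assumptions for illustration, not a recommendation for any particular site.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed work factor for this sketch; tune it for your own hardware.
ITERATIONS = 200_000


def hash_passphrase(passphrase: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key); store these instead of the plaintext."""
    if salt is None:
        salt = os.urandom(16)  # a fresh random salt for every account
    key = hashlib.pbkdf2_hmac("sha256", passphrase.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_passphrase(passphrase: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the login attempt and compare in constant time."""
    _, candidate = hash_passphrase(passphrase, salt)
    return hmac.compare_digest(candidate, stored_key)
```

A database built this way still leaks something if it is stolen, but the attacker has to brute-force each passphrase individually rather than simply reading them out. It does nothing against phishing or keyloggers, which is part of why additional factors are attractive.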

In order to mitigate this, then, some services are beginning to use multifactor authentication, requiring more than just a single passphrase to allow authentication. These additional factors can be grouped into one of three categories:

- Something You Know

The simplest factor, the passphrase, is an example of “Something You Know”. That is, the secret that the user is able to enter into the computer system is a partial proof of identity.

- Something You Have

Another factor, “Something You Have”, refers to an object that is in the possession of the user attempting to log in. Something like an RSA SecurID token, for example, would count as “Something You Have”. The numbers that the SecurID generates change at a fixed interval and are designed to be impractical to predict or replay. Being able to enter the current number into the login window is strong evidence that the user possesses the device.
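The algorithm inside an RSA token is proprietary, so I can't show it here. As a stand-in, this is a minimal sketch of the open TOTP scheme (RFC 6238), which illustrates the same idea: the token and the server share a secret, and both derive a short-lived number from that secret and the current time. The function name and defaults are my own choices for illustration.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time code from a shared secret (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    # Both sides count how many `step`-second intervals have elapsed since the epoch.
    counter = int(at_time) // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low nibble of the last byte selects a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

The server runs the same calculation and compares the result with what the user typed. Since the code depends on the current time window, a number captured by a keylogger stops being useful shortly afterwards, and without the secret there is no practical way to work out the next one.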

- Something You Are

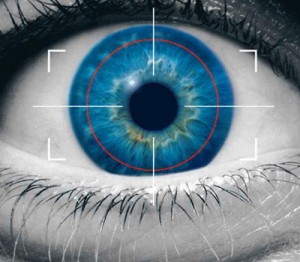

The final factor, “Something You Are”, is also known as biometrics. This encompasses fingerprint readers, retinal scanners, voiceprints, and other mechanisms that use part of the user’s anatomy as an authentication token.

Combining two or three different authentication techniques from these three broad categories is what constitutes “multifactor authentication”. Using only one of them is “single-factor authentication,” requiring two is “two-factor authentication”, and asking for something from each category is “three-factor authentication”.

Let’s take a look at this in a normal office environment. You probably have an ID card that is used with a magstripe reader or an RFID reader to open the door at work: this is single-factor, because it only requires Something You Have. Similarly, logging into your computer with a username and passphrase is also single-factor, because it only requires Something You Know. But if you log in to your Gmail account using their new phone-based authentication system, you are using two-factor: the original passphrase is Something You Know, and the mobile phone is Something You Have. Likewise, if you have something like a fingerprint reader on your laptop and must enter a passphrase and swipe your finger to log in, that is also two-factor (Something You Know and Something You Are).
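The counting rule in these examples can be sketched in a few lines: what matters is how many distinct categories are represented, not how many credentials the user presents. The credential names and the mapping below are hypothetical, chosen to mirror the examples in this post.

```python
# Hypothetical mapping from credential type to factor category.
FACTOR_CATEGORY = {
    "passphrase": "know",
    "pin": "know",
    "id_card": "have",
    "mobile_phone": "have",
    "hardware_token": "have",
    "fingerprint": "are",
    "retina_scan": "are",
}


def factor_count(credentials):
    """Count the distinct categories covered by the presented credentials."""
    return len({FACTOR_CATEGORY[c] for c in credentials})


door_badge = factor_count(["id_card"])                      # Something You Have only
phone_login = factor_count(["passphrase", "mobile_phone"])  # Know + Have
two_secrets = factor_count(["passphrase", "pin"])           # both are Something You Know
```

Note the last case: asking for a passphrase and a PIN is still single-factor, because both come from the same category and tend to be compromised in the same ways.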

A word of caution: clearly, multifactor authentication architectures can make authentication more reliable. While it is easy for a passphrase to be compromised, intentionally or not, it is much more difficult to steal someone’s passphrase AND their employee ID card or mobile phone or other physical token. But when planning to deploy a system like this, it is very important to ensure that it can recover from lost authentication tokens. If you’re using a phone system like the Google example, what happens when a user loses his or her phone? Can your fingerprint reader handle a situation where a user has a cut on his or her fingertip? It is important to think through the failure scenarios as thoroughly as the successful ones.

iPhone Password Disclosure

February 10, 2011

Apple’s iPhone product line doesn’t exactly have the most secure reputation – and this new attack certainly won’t help.

Researchers from Fraunhofer SIT have found a way to download all of the usernames and passwords stored in the iPhone’s keychain in a matter of minutes. A jailbreaking tool is used to install an SSH server on the phone, and then the SSH protocol is used to run a keychain access script and pull all of the credentials out. Even a “locked” phone is susceptible.

UL Approval

February 10, 2011

Underwriters Laboratories, the independent product testing firm that certifies electrical and electronic devices of all stripes, is launching a new standard for security testing. UL2825, which will be officially launched on February 14, will verify that equipment can handle DDoS traffic, malicious traffic, and other adverse security conditions.

New HP Products

February 9, 2011

HP has been remarkably quiet since their acquisition of Palm last year, but that might be changing soon – it looks like they will be releasing a new tablet as well as a pair of new phones. It will be interesting to see if the webOS platform, which was an impressive product doomed by Palm’s atrocious marketing techniques, can gain a foothold in the iOS-dominated smartphone and tablet market.

Openfiler

February 8, 2011

If you’re looking to centralize the data storage in your enterprise – perhaps in response to a particularly persuasive and insightful article you read on the Internet somewhere – you might want to take a look at the Openfiler project.

Openfiler is a Linux distribution designed to be used as the interface for a Network Attached Storage device. Essentially, it is used to build a storage pool that the other computers in your environment can connect to in order to share data. It supports NFS, SMB, FTP, iSCSI, and a tremendous number of other acronyms. The only real annoyance is that the otherwise excellent web GUI doesn’t include any tools for setting up an iSCSI initiator, so that must be done from the command line.

HBGary Breach

February 7, 2011

According to several sources, including this article at eWeek, security firm HBGary Federal is paying the price for taunting the hacktivist group Anonymous. A few days ago, HBGary claimed to have uncovered information about the leadership structure and identities of Anonymous. In retaliation, the group compromised the HBGary network, posted internal emails, and generally caused havoc.

(Update: Apparently, the root of the compromise was a social engineering attack. Someone was convinced that they were sending authentication credentials for a host behind the firewall to a legitimate user; apparently, they were incorrect. The idea that a “security” firm would be sending unencrypted email with account details in response to an unvalidated, unsigned message boggles my mind.)

Hoover Dam

February 7, 2011

Part of the hype for the current “Internet Kill Switch” legislation has been evocative images of the Hoover Dam. Clearly, nobody wants the floodgates of the Hoover Dam to open due to an Internet security breach – it’s a great image, because it’s like something out of a Bond movie. So the backers of the bill have been painting that picture and hoping that the visceral dread it evokes will help carry the bill through Congress.

Only one problem – the Hoover Dam, according to the people who actually manage it, isn’t connected to the Internet.

Next thing you know, villains won’t have eyepatches and cats. What a world.

Definition Monday: Intrusion Detection Systems

February 7, 2011

Welcome to Definition Monday, where we define and explain a common technology or security concept for the benefit of our less experienced readers. This week: Intrusion Detection Systems.

An Intrusion Detection System, often referred to by the abbreviation “IDS”, is exactly what it sounds like. It is a piece of hardware or software that watches data changes or traffic in a particular environment, looking for suspicious or exploitative patterns. Think of it like the high-tech version of a motion detector light on a house; it passively monitors the environment until something triggers it, and then performs a specified task. Just like the motion detector will turn on the light, the IDS will log the problem, generate an SMS text message to an administrator, or email an affected user.

Broadly speaking, there are two common classes of IDS – network-based IDS (NIDS) and host-based IDS (HIDS).

Network-based IDS (NIDS)

A NIDS system passively monitors the traffic in an environment, watching for certain patterns that match a defined set of signatures or policies. When something matches a signature, an alert is generated – the action that occurs then is configurable by the administrator.
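To give a feel for what “matching a signature” means, here is a toy sketch. It is not Snort’s rule language or engine, just the general shape of the idea: each signature is a byte pattern with an optional port restriction, and every packet payload is checked against the list. All of the class names, signatures, and addresses are made up for illustration.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Signature:
    name: str
    pattern: re.Pattern              # compiled bytes regex to look for in the payload
    dest_port: Optional[int] = None  # None means "any port"


@dataclass
class Packet:
    src: str
    dst: str
    dest_port: int
    payload: bytes


# Hypothetical signatures; real rule sets are far larger and more precise.
SIGNATURES = [
    Signature("path traversal attempt", re.compile(rb"\.\./\.\./"), dest_port=80),
    Signature("shell invocation in payload", re.compile(rb"/bin/sh")),
]


def inspect(packet: Packet, signatures=SIGNATURES) -> List[str]:
    """Return one alert string per signature that the packet matches."""
    alerts = []
    for sig in signatures:
        if sig.dest_port is not None and sig.dest_port != packet.dest_port:
            continue  # signature only applies to a different port
        if sig.pattern.search(packet.payload):
            alerts.append(
                f"ALERT [{sig.name}] {packet.src} -> {packet.dst}:{packet.dest_port}"
            )
    return alerts
```

What happens with the returned alerts (a log entry, an email, a text message) is the configurable part; the matching itself is purely passive.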

The most common NIDS in use these days is probably Snort, an open-source solution written by Marty Roesch and maintained by his company, Sourcefire. Snort is capable of acting as either a passive eavesdropper or as an active in-line part of the network topology. In this diagram, the lefthand example is a passive deployment, the right is in-line.

As you can see in the example on the left, the computer running snort is connected to the firewall – the firewall would be configured with a “mirror” or “spanning” port that would essentially copy all of the incoming and outgoing traffic to a particular interface for the snort software to monitor. This way, any suspicious traffic passing the border of the network would be subject to examination.

In the example on the right, the traffic is passing directly through the snort machine, using two Ethernet interfaces. This is an excellent solution for environments where a mirror port is unavailable, such as a branch office using low-end networking equipment that can’t provide the additional interface.

(It is important to note that a NIDS should be carefully placed within the network topology for maximum effectiveness. If two of the client machines in these diagrams are passing suspicious traffic between them, the snort machine will not notice; it only sees traffic destined for the Internet. It is always possible, of course, to run multiple NIDS systems and tie all of the alerts into one console for processing so as to eliminate these blind spots.)

Because of Snort’s large install base, rules for detecting new threats are constantly being produced and published for free usage on sites like Emerging Threats. If you want to be alerted when a host on your network is connecting to a known botnet controller, for example, the up-to-the-minute rules for this can be downloaded from ET. The same goes for signatures of new worms and viruses, command-and-control traffic, and more.
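The botnet controller case boils down to comparing each outbound destination against a published list of bad addresses. Here is a minimal sketch of that lookup, assuming a plain text list with one address or CIDR range per line. That file format and the function names are my own assumptions; the actual ET downloads are Snort rules, not a bare address list.

```python
import ipaddress


def load_blocklist(lines):
    """Parse one address or CIDR range per line, ignoring blanks and # comments."""
    networks = []
    for line in lines:
        entry = line.split("#", 1)[0].strip()
        if entry:
            # A bare address becomes a single-host network (/32 or /128).
            networks.append(ipaddress.ip_network(entry, strict=False))
    return networks


def is_known_controller(dest_ip: str, blocklist) -> bool:
    """True if the destination falls inside any listed network."""
    addr = ipaddress.ip_address(dest_ip)
    return any(addr in net for net in blocklist)
```

A linear scan like this is fine for a short list; a real sensor handling thousands of entries at line rate would want a faster lookup structure.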

So a NIDS is an excellent tool for detecting when a host on your network has been compromised or is otherwise producing suspicious traffic. But what about exploits that don’t cause traffic generation? If someone compromises your e-commerce server, for example, and installs a rootkit and starts modifying the code used to generate web pages, your NIDS will be none the wiser. For more careful monitoring of individual high-priority hosts, you would use a HIDS.

Host-based IDS (HIDS)

While a NIDS watches the traffic on a network segment, a HIDS watches the activities of a particular host. A common open-source HIDS is OSSEC, named as a contraction of Open Source Security.

OSSEC will monitor the Windows Registry, the filesystem of the computer, generated logs, and more, looking for suspicious behavior. As with a NIDS, an alert will be generated by any suspicious activity on the host and the results of the alert can be set by the administrator. If a process is attempting to modify the documents on your main web server, for example, OSSEC can kill that process, lock out the account that launched it, and send an email to the system administrator’s cell phone. It’s a remarkably flexible and impressive system.
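The filesystem part of this is often called file integrity monitoring, and the core idea is simple enough to sketch: record a hash of every file while the system is known to be good, then periodically re-hash and report differences. This is not how OSSEC is implemented or configured, just an illustration of the technique using the Python standard library; the function names are hypothetical.

```python
import hashlib
import os


def snapshot(root):
    """Map each file's path (relative to root) to the SHA-256 of its contents."""
    digests = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as fh:
                digests[os.path.relpath(path, root)] = hashlib.sha256(fh.read()).hexdigest()
    return digests


def compare(baseline, current):
    """Report files added, removed, or modified since the baseline snapshot."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            p for p in baseline.keys() & current.keys() if baseline[p] != current[p]
        ),
    }
```

Take the baseline with `snapshot()` right after a clean deployment, store it somewhere the web server cannot write to, and run `compare()` on a schedule. Any non-empty list in the result is a candidate for an alert.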

Much like a NIDS, the placement of HIDS software needs to be carefully planned. You don’t want to receive an alert every time a file is accessed on a file server, for example; your administrator will be overwhelmed, and will simply stop reading alerts altogether. The system has to be carefully configured and the monitored behaviors pruned so as to eliminate false alarms and ensure that true security issues are noticed and alerted properly.

Posted by matt
